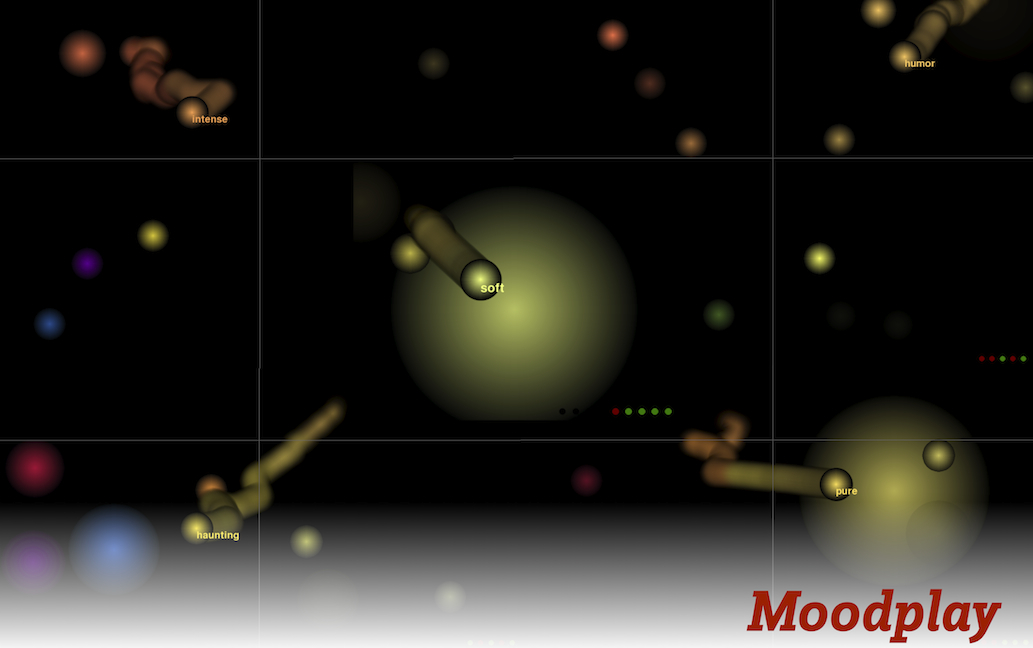

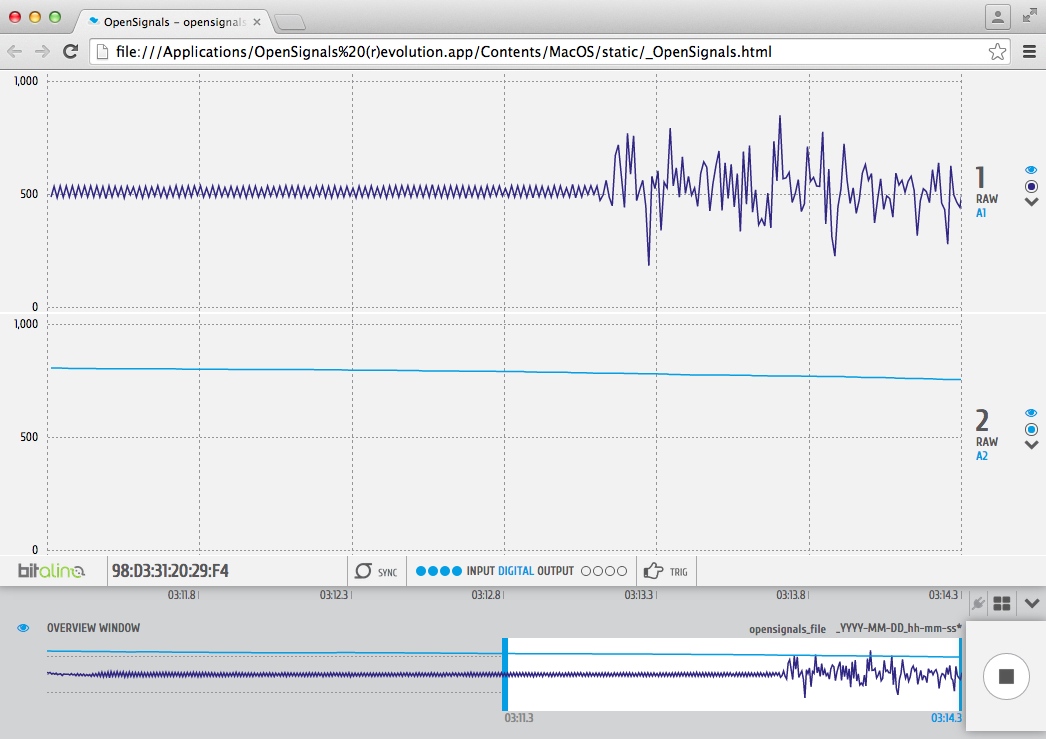

MoodBox is a collaborative music jukebox that plays music following the emotions felt by its users. We derive correlates of human emotions from physiological signals measured with wearable sensors. Measures of arousal (excitation) and valence (pleasantness) are computed from EEG signals (Neuroelectrics Enobio) as well as from EDA and EMG signals (Biosignalsplux Bitalino).

The arousal and valence states monitored from users over time are sent to our web server at Queen Mary University of London, where they act as votes for the music selected by MoodBox. We map a collection of 10,000 tracks (I Like Music) to the arousal/valence space using our mood recognition technology, which relies on affective computing and semantic web techniques.

The tracks are processed by audio FX (distortion, reverb, EQ) whose parameters are modulated by the emotional information collected from users (e.g. angry: more distortion; relaxed: more reverb). Our visualiser displays the users' emotional states in the two-dimensional arousal/valence space, together with the name of the track being played back according to how users feel on average!
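The voting loop described above can be illustrated with a small Python sketch. This is not MoodBox's actual implementation; it rests on several assumptions not stated in the description: each user's vote is an (arousal, valence) pair normalised to [-1, 1], every track carries precomputed arousal/valence coordinates, the track nearest (Euclidean distance) to the users' average mood is selected, and effect amounts follow a hypothetical mapping in which an angry mood (high arousal, negative valence) raises distortion and a relaxed mood (low arousal, positive valence) raises reverb. The names `Track`, `average_mood`, `select_track` and `fx_parameters` are hypothetical.

```python
from dataclasses import dataclass
from math import dist
from statistics import fmean


@dataclass(frozen=True)
class Track:
    """A track placed in the arousal/valence space (assumed range [-1, 1])."""
    title: str
    arousal: float
    valence: float


def average_mood(votes):
    """Average the (arousal, valence) votes received from all users."""
    if not votes:
        raise ValueError("no votes received")
    return (fmean(a for a, _ in votes), fmean(v for _, v in votes))


def select_track(tracks, mood):
    """Return the track closest to the average mood (Euclidean distance)."""
    return min(tracks, key=lambda t: dist((t.arousal, t.valence), mood))


def _clamp(x):
    """Restrict a value to the range [0, 1]."""
    return max(0.0, min(1.0, x))


def fx_parameters(mood):
    """Hypothetical mapping from the average mood to effect amounts in [0, 1].

    Angry (high arousal, negative valence): more distortion.
    Relaxed (low arousal, positive valence): more reverb.
    """
    arousal, valence = mood
    distortion = _clamp(arousal) * _clamp(-valence)
    reverb = _clamp(-arousal) * _clamp(valence)
    return {"distortion": distortion, "reverb": reverb}


if __name__ == "__main__":
    # Illustrative placeholder tracks and votes, not real data.
    tracks = [
        Track("Calm Piece", -0.5, 0.75),
        Track("Aggressive Piece", 0.75, -0.75),
        Track("Upbeat Piece", 0.75, 0.5),
    ]
    votes = [(0.75, -0.5), (0.25, -1.0)]
    mood = average_mood(votes)
    print(mood)                              # (0.5, -0.75)
    print(select_track(tracks, mood).title)  # Aggressive Piece
    print(fx_parameters(mood))               # {'distortion': 0.375, 'reverb': 0.0}
```

Nearest-neighbour selection is only one plausible rule; weighting recent votes more heavily or avoiding immediate repeats would fit the same structure.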

Technologies used:

NEfeaturesXtractor, OpenSignals Bitalino, Python, REST API, SuperCollider

If you are interested in the project, please contact us:

Mathieu Barthet (m.barthet@qmul.ac.uk)

George Fazekas (gyorgy.fazekas@eecs.qmul.ac.uk)

Alo Allik (a.allik@qmul.ac.uk)

Acknowledgements:

This project was partly funded by the Fusing Audio and Semantic Technologies for Intelligent Music Production and Consumption (FAST IMPACt) EPSRC Grant EP/L019981/1 (@semanticaudio).